Storage

Container Storage Interface

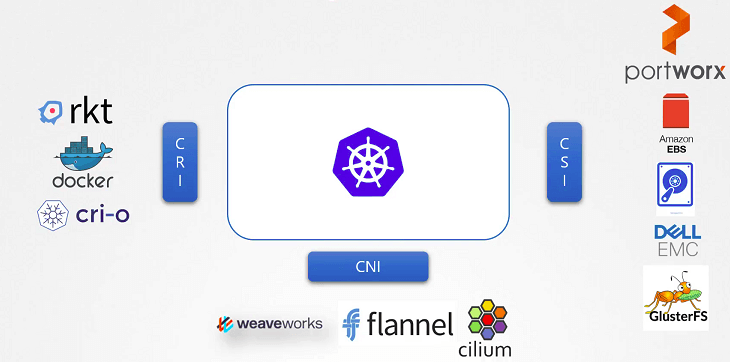

- CRI; The Container Runtime Interface is a standard that defines how an orchestration solution communicates with container runtimes such as Docker. Any new container runtime can simply follow the CRI standard to work with Kubernetes.

- CNI; The Container Network Interface was developed to support different networking solutions. Any networking vendor can develop a plugin based on the CNI standard and make its solution work with Kubernetes.

- CSI; The Container Storage Interface was developed to support multiple storage solutions. With CSI, you can write your own driver so that your storage works with Kubernetes.

- Storage solutions such as AWS EBS, Azure Disk, NetApp, Dell EMC Isilon, and GlusterFS have their own CSI drivers.

- It is important to note that CSI is a universal standard, not one specific to Kubernetes. If implemented, it allows any container orchestration tool to work with any storage vendor that has a supported plugin.
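As a rough illustration of how a CSI driver shows up in a manifest, the sketch below defines a PersistentVolume (covered later in these notes) backed by the AWS EBS CSI driver. The object name, capacity, and volume handle are hypothetical placeholders, and the sketch assumes the driver is already installed in the cluster.

```yaml
# Sketch only: a PersistentVolume served by a CSI driver.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: csi-pv-example                    # hypothetical name
spec:
  capacity:
    storage: 5Gi                          # assumed size
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  csi:
    driver: ebs.csi.aws.com               # name the AWS EBS CSI driver registers under
    volumeHandle: vol-0123456789abcdef0   # hypothetical EBS volume ID
    fsType: ext4
```

Only the `csi` block is driver-specific; the rest of the object looks the same for any storage backend.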

Volumes in Kubernetes

- Pods are transient. When a Pod is created to process data and then deleted, the data processed by it gets deleted as well.

- To persist data, we attach a volume to the Pod. The data processed by the Pod is now stored in the volume, and even after the Pod is deleted, the data remains.

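For example, the following Pod mounts a hostPath volume at /numbers:

    apiVersion: v1
    kind: Pod
    metadata:
      name: counter-pod
    spec:
      containers:
        - name: counter-container
          image: alpine
          volumeMounts:
            # where the volume appears inside the container
            - mountPath: /numbers
              name: counter-vol
      volumes:
        # the volume is backed by a directory on the node
        - name: counter-vol
          hostPath:
            path: /tmp/numbers

- Options;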

- The hostPath option backs the volume with a directory on the node's filesystem. It is not recommended in a multi-node cluster, because each node has its own separate copy of that directory.

- Kubernetes supports several types of standard storage solutions such as NFS, GlusterFS, Flocker, CephFS, and AWS EBS.

- To configure an AWS EBS volume, we use the `awsElasticBlockStore` field with `volumeID` and `fsType`.
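A minimal sketch of the counter Pod with its hostPath volume swapped for an EBS volume. The volume ID is a hypothetical placeholder, and the sketch assumes the EBS volume already exists in the same availability zone as the node. This in-tree field is deprecated in recent Kubernetes releases in favor of the EBS CSI driver, so treat it as illustrative.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: counter-pod
spec:
  containers:
    - name: counter-container
      image: alpine
      volumeMounts:
        - mountPath: /numbers
          name: counter-vol
  volumes:
    - name: counter-vol
      awsElasticBlockStore:
        volumeID: vol-0123456789abcdef0   # hypothetical EBS volume ID
        fsType: ext4                      # filesystem on the volume
```

Only the `volumes` entry changes; the container still sees the storage at /numbers.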

Persistent Volumes

- Persistent volumes allow you to manage storage centrally.

- A persistent volume is a cluster-wide pool of storage volumes configured by an administrator to be used by users deploying applications on the cluster. The users can now select storage from this pool using persistent volume claims.

A PersistentVolume backed by hostPath can be defined as follows:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv-vol
    spec:
      accessModes:
        - ReadWriteOnce
      capacity:
        storage: 1Gi
      hostPath:
        path: /data
      persistentVolumeReclaimPolicy: Retain

- accessModes; defines how a volume should be mounted on the hosts. The values are:

  - ReadOnlyMany
  - ReadWriteOnce
  - ReadWriteMany

- Capacity; used to specify the amount of storage to be reserved for this persistent volume.

- Volume type; we used hostPath, which uses storage from the node's local directory.

- persistentVolumeReclaimPolicy; defines what happens to the PV when its PVC is deleted. With Retain, the PV remains until the admin deletes it manually, and it is not available for reuse by any other claim.

  - Retain; default for manually created PVs.
  - Delete; default for dynamically provisioned PVs.
  - Recycle; deprecated.

Persistent Volume Claims

- PV and PVC are two separate objects. An admin creates a set of PVs, and a user creates PVCs to use them.

- Once a PVC is created, Kubernetes binds a PV to it based on the request in the claim and the properties set on the volume.

- Every PVC is bound to a single PV, since there is a one-to-one relationship between claims and volumes. During binding, Kubernetes looks for a PV that has sufficient capacity for the claim and matches its other request properties, such as accessModes and storageClassName. If no closer match is available, a claim can be bound to a volume larger than it requested. To bind to a particular volume, use labels and selectors.
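To make the labels-and-selectors point concrete, here is a minimal sketch in which a claim targets one specific volume. The object names, the `tier: fast` label, and the sizes are all hypothetical.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-fast              # hypothetical name
  labels:
    tier: fast               # hypothetical label the claim selects on
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: /data
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: fast-claim           # hypothetical name
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 500Mi
  selector:
    matchLabels:
      tier: fast             # only PVs carrying this label are considered
```

The selector narrows the candidates; capacity and access mode still have to match. On clusters with a default StorageClass, the claim may also need `storageClassName: ""` so that it is matched against manually created PVs.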

A PV, a PVC that claims it, and a Pod that uses the claim:

    # Volume created by the admin
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv-vol
    spec:
      capacity:
        storage: 500Mi
      accessModes: ["ReadWriteMany"]
      hostPath:
        path: /data
    ---
    # Claim created by the user
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc
    spec:
      accessModes: ["ReadWriteMany"]
      resources:
        requests:
          storage: 250Mi
    ---
    # Pod that mounts the claimed storage at /logs
    apiVersion: v1
    kind: Pod
    metadata:
      name: mypod
    spec:
      containers:
        - name: mycontainer
          image: busybox
          volumeMounts:
            - mountPath: /logs
              name: mypodclaim
      volumes:
        - name: mypodclaim
          persistentVolumeClaim:
            claimName: my-pvc

Storage Classes

- When using storage from a cloud provider, we need to manually provision the storage before we can create a PV using it. This is called Static Provisioning.

- In dynamic provisioning, a provisioner automatically provisions storage on the cloud and attaches it to the Pod when a claim is made. This is achieved by creating a StorageClass object.

- When using storage classes, we don’t need to create PVs manually. We create a PVC that names a storage class, and the storage class handles creating the PV and binding it to the PVC.

For example, a StorageClass for Google Cloud persistent disks:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: gcp-storage
    provisioner: kubernetes.io/gce-pd
    parameters:
      type: pd-ssd
      replication-type: regional-pd

- provisioner; depends on the type of underlying storage being used. `kubernetes.io/no-provisioner` means dynamic provisioning is disabled.

- Using parameters, we can create classes of storage.

- reclaimPolicy and volumeBindingMode;

  - `reclaimPolicy` defines the behavior of the PV when the PVC is deleted.

    - Delete; delete the PV when the PVC is deleted.

    - Retain; retain the PV when the PVC is deleted.

  - `volumeBindingMode` defines when the volume should be created and bound to a PVC.

    - WaitForFirstConsumer; wait until a Pod uses the PVC.

    - Immediate; create a volume and bind it to the PVC immediately.

A StorageClass with both fields set:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: gcp-storage
    provisioner: kubernetes.io/gce-pd
    reclaimPolicy: Delete
    volumeBindingMode: WaitForFirstConsumer
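To show how a claim triggers dynamic provisioning, here is a minimal sketch of a PVC that requests storage from the gcp-storage class defined above. The claim name and the requested size are assumptions.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dynamic-claim            # hypothetical name
spec:
  storageClassName: gcp-storage  # must match the StorageClass name
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi              # assumed size
```

No PV is created by hand here. Because the class uses WaitForFirstConsumer, the claim is expected to stay Pending until a Pod references it, at which point the provisioner creates the disk and the matching PV.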