Security

Security Primitives

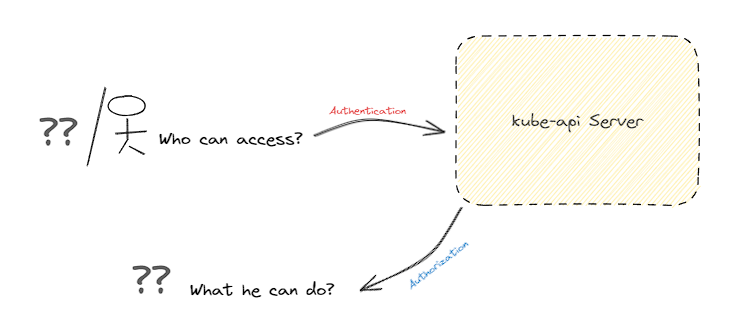

- As we already know, the Kube-API server is at the center of all operations within Kubernetes. We interact with it through the kubectl utility or by accessing the API directly, and through it you can perform any operation on the cluster.

- Securing the API server is very important, so two questions must be answered: who can access it, and what can they do? These are authentication and authorization.

Authentication

Different ways can be used to authenticate to the API server.

→ User IDs and passwords stored in a static file (columns: password, username, UID, and optionally groups)

→ Tokens

→ Certificates

→ Integration with external authentication providers like LDAP.

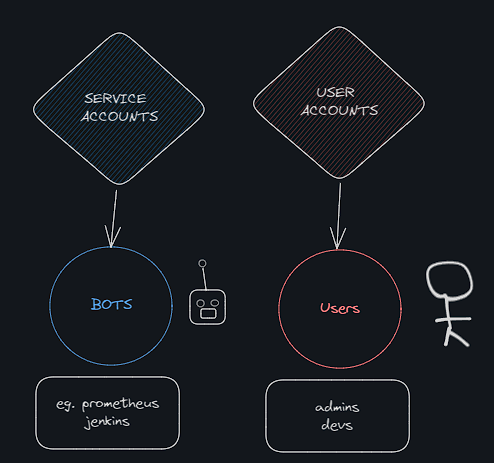

⇒ For machines we use Service Accounts.

💡 For basic authentication, pass the file path in the kube-apiserver.service file with --basic-auth-file=user-details.csv and restart the service. Note that static password files were removed in Kubernetes v1.19, so this only applies to older clusters.

The same applies when using tokens to authenticate: pass --token-auth-file=user-details.csv.
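As a rough illustration of the static token file, the sketch below writes a sample file and looks a bearer token up in it. The column order (token, user name, UID, optional quoted group list) is the format expected by --token-auth-file; the tokens, the user names, and the lookup_user helper are made up for this example, and the lookup only imitates what the API server does internally.

```shell
# Hypothetical sample data: token,user,uid,"group1,group2" (groups are optional).
token_file="$(mktemp)"
cat > "$token_file" <<'EOF'
token-aaa111,dev-user,u0001,"developers,qa"
token-bbb222,ops-user,u0002
EOF

# lookup_user TOKEN: print the user name bound to TOKEN, or fail if unknown.
# This only imitates the API server's lookup; it is not its implementation.
lookup_user() {
  awk -F',' -v token="$1" '
    $1 == token { print $2; found = 1 }
    END { exit (found ? 0 : 1) }
  ' "$token_file"
}

lookup_user "token-aaa111"                         # prints: dev-user
lookup_user "wrong-token" || echo "unauthorized"   # prints: unauthorized
```

A request carrying "Authorization: Bearer token-aaa111" would therefore be treated as dev-user, while an unknown token is rejected.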

+ Consider volume mounts: when the API server runs as a pod, the file must also be mounted into its container.

While authenticating, use:

    # Basic auth
    $ curl -v -k https://10.0.1.2:6443/api/v1/pods -u "<user>:<password>"

    # Token auth
    $ curl -v -k https://10.0.1.2:6443/api/v1/pods --header "Authorization: Bearer <token>"

Authorization

Once access to the cluster is granted, authorization defines which operations a given user can perform.

- Authorization is implemented using Role-Based Access Control (RBAC), where users are associated with groups that have specific permissions.

- Other authorization modules exist as well, such as Attribute-Based Access Control (ABAC), the Node Authorizer, and Webhooks.

++ Adds

- All communication between various components is secured using TLS encryption.

- Communication between applications within the cluster can be restricted using Network Policies.

TLS in Kubernetes

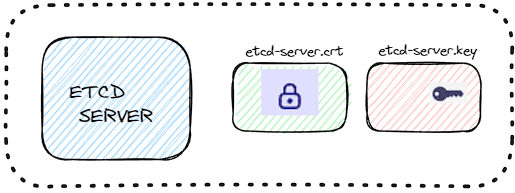

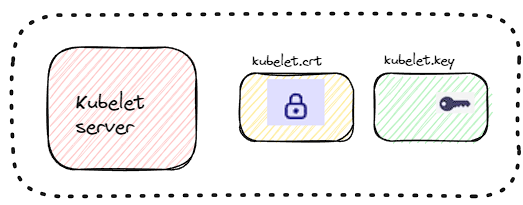

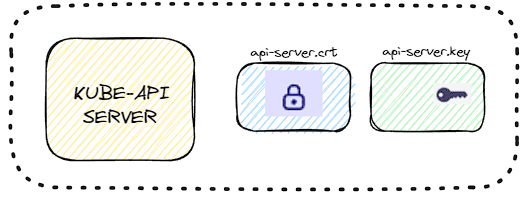

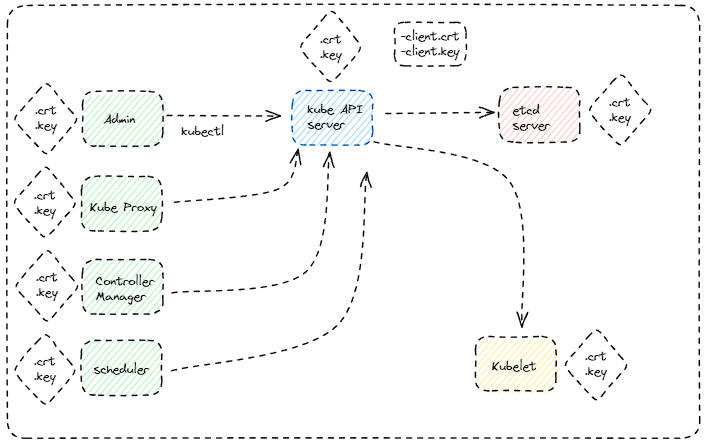

- Communication between all the components within the Kubernetes cluster should be secured using TLS certificates. All services within the cluster should use server certificates, and all clients should use client certificates to verify who they are.

Certificates for servers

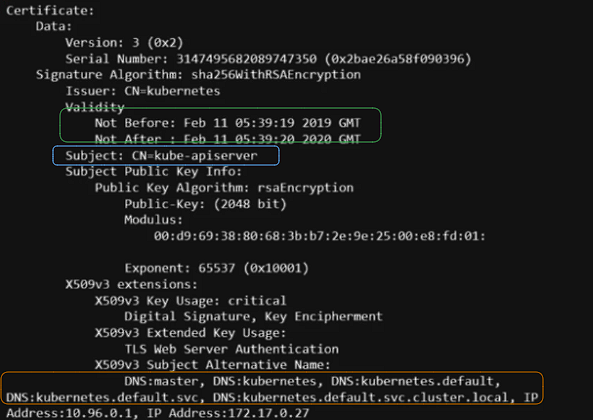

- The Kube-API server exposes an HTTPS service that lets users and other components manage the cluster. Hence, it requires a certificate to secure all communication with its clients.

Certificates for clients

- The admin responsible for managing the cluster requires a certificate and key pair to authenticate to the Kube-API server.

- The Kube scheduler also interacts with the Kube-API server to look for pods that require scheduling. Hence, it needs to prove its identity using a client TLS certificate.

- The Kube Controller Manager also communicates with the Kube-API server, so it needs a certificate and key pair to authenticate to the API server.

- The Kube proxy also needs to authenticate to the Kube-API server, so it needs its own certificate and key pair to prove its identity.

- The Kube API server communicates with the etcd server and with the Kubelet server as a client. It can either reuse the key pair generated for serving its own API, or use a new pair of certificates generated specifically for authenticating to those servers.

💡 Public keys (certificates) usually have a .crt or .pem extension → server.crt/server.pem for servers, client.crt/client.pem for clients.

Private keys usually have a .key or -key.pem extension → server.key/server-key.pem for servers, client.key/client-key.pem for clients.

    # Sign a new certificate for the apiserver-etcd-client
    openssl x509 -req -in /etc/kubernetes/pki/apiserver-etcd-client.csr \
      -CA /etc/kubernetes/pki/etcd/ca.crt -CAkey /etc/kubernetes/pki/etcd/ca.key \
      -CAcreateserial -out /etc/kubernetes/pki/apiserver-etcd-client.crt

TLS Certificate Creation and Details

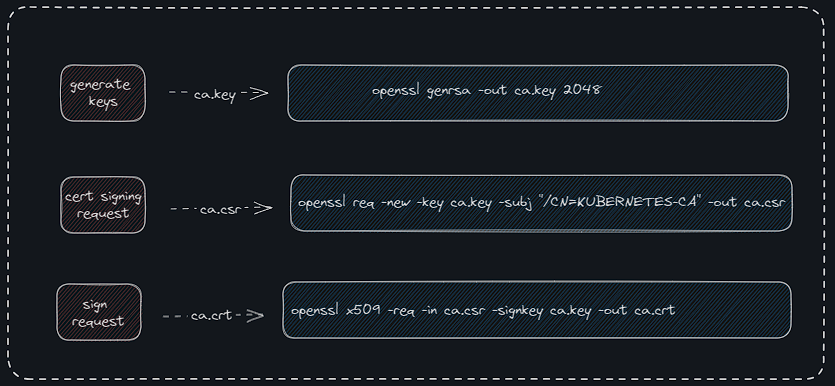

Starting with the CA certificates;

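openssl genrsa -out ca.key 2048 → create a private key.

openssl req -new -key ca.key -subj "/CN=KUBERNETES-CA" -out ca.csr → use the private key to generate a certificate signing request (.csr), which is like a certificate with all of your details but no signature. CN stands for "common name".

openssl x509 -req -in ca.csr -signkey ca.key -out ca.crt → self-sign the request with the CA's own private key to produce the CA certificate (ca.crt) used in the steps that follow.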

For admin user;

openssl genrsa -out admin.key 2048 → generate a private key.

openssl req -new -key admin.key -subj "/CN=kube-admin" -out admin.csr → create a certificate signing request.

openssl x509 -req -in admin.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out admin.crt → create the certificate, this time signing it with the CA pair (ca.crt + ca.key) instead of the user's own key.
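Put together, the CA and admin steps can be run end to end. The sketch below assumes openssl is installed and writes everything to a throwaway directory. The 365-day validity and the ca.ext extension file (which marks the CA certificate as a CA so that verification also passes on recent OpenSSL releases) are assumptions added for illustration and are not part of the notes.

```shell
pki="$(mktemp -d)"   # throwaway directory, not /etc/kubernetes/pki

# 1. CA: private key, signing request, self-signed certificate.
openssl genrsa -out "$pki/ca.key" 2048 2>/dev/null
openssl req -new -key "$pki/ca.key" -subj "/CN=KUBERNETES-CA" -out "$pki/ca.csr"
# Assumption: explicit CA extensions, written to a small extension file.
printf 'basicConstraints=critical,CA:TRUE\nkeyUsage=critical,keyCertSign,cRLSign\n' > "$pki/ca.ext"
openssl x509 -req -in "$pki/ca.csr" -signkey "$pki/ca.key" -days 365 \
  -extfile "$pki/ca.ext" -out "$pki/ca.crt" 2>/dev/null

# 2. Admin user: private key, signing request, certificate signed by the CA.
openssl genrsa -out "$pki/admin.key" 2048 2>/dev/null
openssl req -new -key "$pki/admin.key" -subj "/CN=kube-admin" -out "$pki/admin.csr"
openssl x509 -req -in "$pki/admin.csr" -CA "$pki/ca.crt" -CAkey "$pki/ca.key" \
  -CAcreateserial -days 365 -out "$pki/admin.crt" 2>/dev/null

# 3. Check the result: the admin certificate must chain back to the CA.
openssl verify -CAfile "$pki/ca.crt" "$pki/admin.crt"
openssl x509 -in "$pki/admin.crt" -noout -subject
```

The same key, request, sign sequence applies to the other client certificates (scheduler, controller manager, kube-proxy), with only the subject changing.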

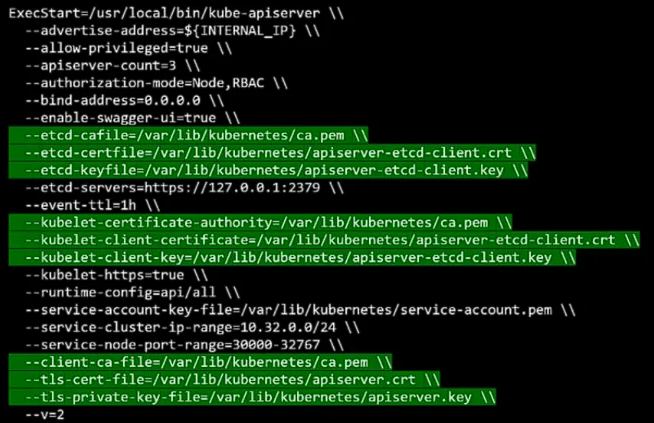

Specifying keys;

Details;

cat /etc/kubernetes/manifests/kube-apiserver.yaml or ps -ef | grep "kube-api" → within the manifest file (or within the kube-apiserver.service unit when the API server runs as a service), you can find the list of TLS certificates in use.

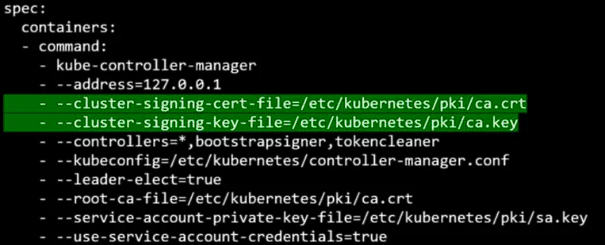

Certificates API

- Kubernetes has a built-in Certificates API that allows you to send a Certificate Signing Request directly to Kubernetes and rotate certificates when they expire. Instead of logging onto the master node and signing the certificate by hand, you create a Kubernetes API object called CertificateSigningRequest. Once created, all certificate signing requests can be listed, reviewed, and approved.

    apiVersion: certificates.k8s.io/v1
    kind: CertificateSigningRequest
    metadata:
      name: chxmxii
    spec:
      signerName: kubernetes.io/kube-apiserver-client  # required in certificates.k8s.io/v1
      groups:
      - system:authenticated
      usages:
      - digital signature
      - key encipherment
      - client auth  # this signer issues client certificates, so "server auth" is not accepted
      request: <base64 encoded csr>

kubectl get csr → to get a list of CSRs.

kubectl certificate approve chxmxii → to approve a certificate.

kubectl get csr chxmxii -o yaml → to view the issued certificate (under status.certificate) and share it with the end user; it must be base64-decoded first.
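The request field expects the CSR file base64-encoded on a single line. Below is a minimal sketch of preparing such a manifest, assuming openssl and base64 are available. The user name jane and the file names are hypothetical, and kubernetes.io/kube-apiserver-client is the built-in signer for client certificates.

```shell
work="$(mktemp -d)"

# Create a key and a signing request for a hypothetical user "jane".
openssl genrsa -out "$work/jane.key" 2048 2>/dev/null
openssl req -new -key "$work/jane.key" -subj "/CN=jane" -out "$work/jane.csr"

# base64 may wrap its output, so strip newlines to get a single line.
request="$(base64 < "$work/jane.csr" | tr -d '\n')"

cat > "$work/jane-csr.yaml" <<EOF
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: jane
spec:
  signerName: kubernetes.io/kube-apiserver-client
  usages:
  - client auth
  request: ${request}
EOF

echo "manifest written to $work/jane-csr.yaml"
# Next steps on a real cluster (not run here):
#   kubectl apply -f "$work/jane-csr.yaml"
#   kubectl certificate approve jane
#   kubectl get csr jane -o jsonpath='{.status.certificate}' | base64 -d > jane.crt
```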

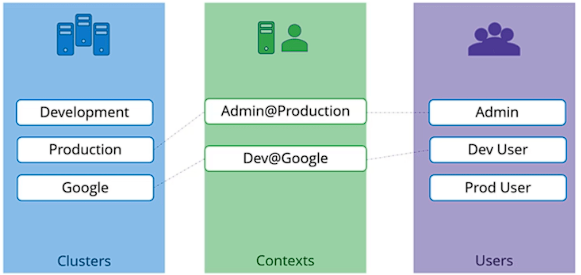

KubeConfig

- By default, the kubectl tool looks for a file named ~/.kube/config. This file stores information such as the client key, the client certificate, the CA certificate, and the address of the Kubernetes server you are interacting with.

- The KubeConfig file has 3 sections;

- Clusters;

  - Say you manage more than one cluster, you can save all the Kubernetes clusters that you need access to here.

- Users;

  - User accounts with which you have access to these clusters.

- Contexts;

  - Each context ties a user to a cluster (and optionally a namespace), defining which user account is used to access which cluster.

    apiVersion: v1
    kind: Config
    clusters:
    - name: mykubeplayground
      cluster:
        certificate-authority: /path/to/ca.crt
        server: https://mykubepg:6443
    users:
    - name: mykubeAdm
      user:
        client-certificate: /path/to/admin.crt
        client-key: /path/to/admin.key
    contexts:
    - name: mykubeAdm@mykubeplayground
      context:
        cluster: mykubeplayground
        user: mykubeAdm
        namespace: dev

kubectl config use-context mykubeAdm@mykubeplayground → to change context.

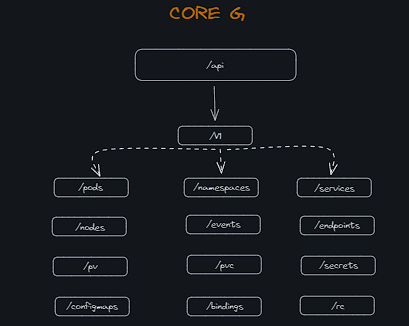

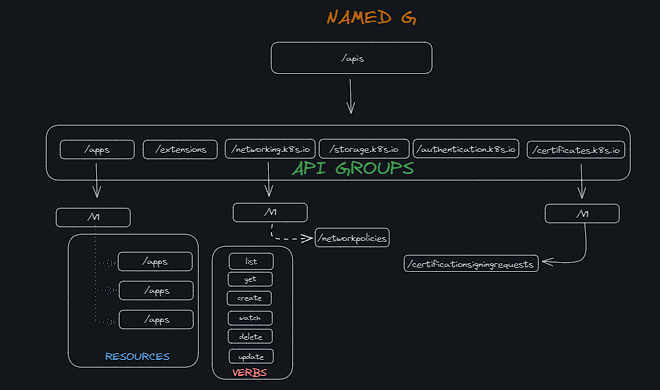

API Groups in Kubernetes

- The Kubernetes API is divided into multiple path groups based on their purpose, such as;

- /version → for viewing the version of the cluster.

- /metrics

- /healthz → for monitoring the health of the cluster.

- /logs → for integrating with third-party logging apps.

To view the API groups, you can query your Kube API server on port 6443.

    $ curl https://localhost:6443 -k --key admin.key --cert admin.crt --cacert ca.crt

- An alternative is to start kubectl proxy, which listens on port 8001 and forwards requests to the API server using the credentials from your kubeconfig.
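Resources themselves live under two more prefixes: /api for the core group and /apis for named groups such as apps or rbac.authorization.k8s.io. The helper below is a small sketch of how the path of a namespaced resource is put together. The name api_path is made up for this example, and the function only builds strings; it does not contact a cluster.

```shell
# api_path GROUP VERSION NAMESPACE RESOURCE
# Pass an empty GROUP ("") for the core API group.
api_path() {
  group="$1"; version="$2"; namespace="$3"; resource="$4"
  if [ -z "$group" ]; then
    printf '/api/%s/namespaces/%s/%s\n' "$version" "$namespace" "$resource"
  else
    printf '/apis/%s/%s/namespaces/%s/%s\n' "$group" "$version" "$namespace" "$resource"
  fi
}

api_path ""     v1 default pods          # /api/v1/namespaces/default/pods
api_path "apps" v1 default deployments   # /apis/apps/v1/namespaces/default/deployments
```

With kubectl proxy running, such a path can be appended to http://localhost:8001 and fetched with curl.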

Role-Based Access Controls

- You can create a role by creating a role object.

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      namespace: default
      name: developer
    rules:
    - apiGroups: [""] # "" indicates the core API group
      resources: ["pods"]
      verbs: ["get", "list", "create", "delete", "update"]
      resourceNames: ["pod1","pod2"]

- For the core group, you can leave the apiGroups section blank, and for any other group, you specify the group name.

- After creating the role, we need to link the user to it. For this, we create another object called RoleBinding, which links a user to a role.

    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      namespace: default
      name: devuser-developer-binding
    subjects:
    - kind: User  # the kind is case-sensitive
      name: dev-user
      apiGroup: rbac.authorization.k8s.io
    roleRef:
      kind: Role
      name: developer
      apiGroup: rbac.authorization.k8s.io

- The RoleBinding object has two sections: subjects is where we specify the user details, and roleRef is where we provide the details of the role we created.

kubectl auth can-i create deployments → to check if you have permission to create deployments.

kubectl auth can-i create pods --as dev-user --namespace prod → uses the --as option to check access for dev-user in the prod namespace.
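To check every verb granted by the developer Role in one go, the loop below runs kubectl auth can-i once per verb. As a precaution it defaults to a dry run that only prints the commands; set KUBECTL=kubectl to execute them against a cluster. The KUBECTL variable and the check_verbs helper are conventions of this sketch, not kubectl features.

```shell
# Dry run by default: commands are printed instead of executed.
KUBECTL="${KUBECTL:-echo kubectl}"

# check_verbs USER NAMESPACE RESOURCE VERB...
check_verbs() {
  user="$1"; namespace="$2"; resource="$3"; shift 3
  for verb in "$@"; do
    # $KUBECTL is intentionally unquoted so "echo kubectl" splits into two words.
    $KUBECTL auth can-i "$verb" "$resource" --as "$user" --namespace "$namespace"
  done
}

check_verbs dev-user default pods get list create delete update
```

In dry-run mode this prints five kubectl auth can-i commands, one per verb listed in the Role.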

Service Accounts

- Kubernetes has 2 types of accounts:

- User Accounts (cluster-wide); used by humans

- Service Accounts (namespaced); used by machines, such as applications running in pods

- Default Service Account; every namespace has a service account named default, which is used by pods that do not specify one.

- Using custom Service Accounts;

    apiVersion: v1
    kind: Pod
    metadata:
      name: webapp
    spec:
      containers:
      - name: nginx
        image: nginx
      serviceAccountName: monitor-sa
      # automountServiceAccountToken: false  # this will prevent the service account token from being auto-mounted to the pod.

💡 The SA of a pod cannot be updated; the pod must be deleted and re-created with a different SA. However, the SA in a deployment can be updated, as the deployment takes care of deleting and recreating the pods.

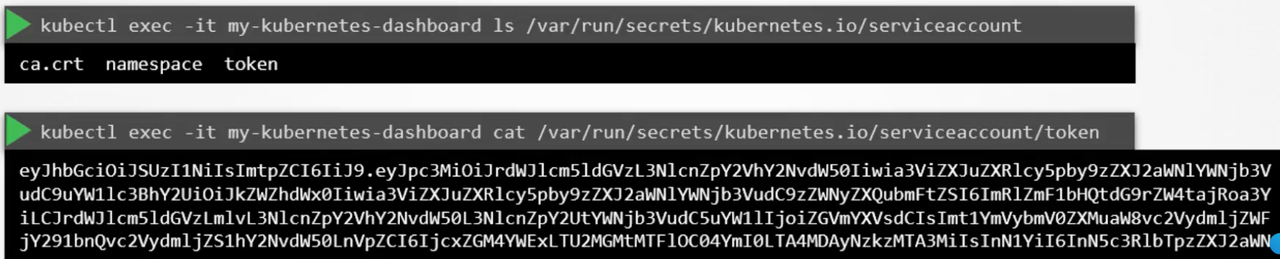

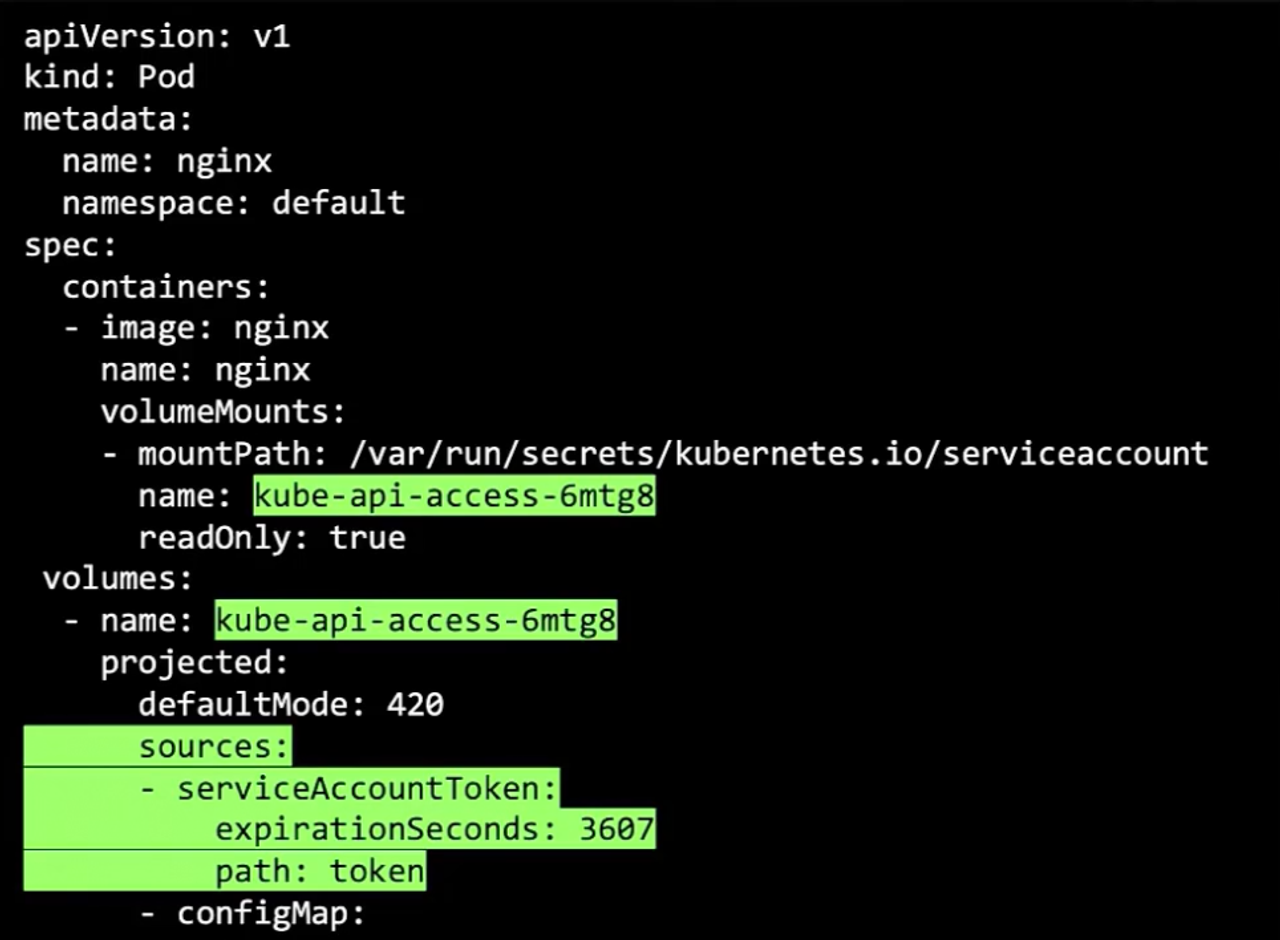

- From v1.24 onwards, Kubernetes no longer creates a long-lived, Secret-based token for each service account automatically. Instead, the TokenRequest API is used to provision tokens for service accounts. These tokens are audience-bound, time-bound, and object-bound, and hence more secure.

kubectl create token <sa_name> → creates a token with a default validity of 1h, which can be changed by passing arguments such as --duration. For pods, a token is requested in the same way and mounted into the pod as a projected volume.
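Because these tokens are JWTs, the bound audience and expiry can be read from the payload, which is the middle of the three dot-separated segments. The sketch below decodes that segment. The sample token is fabricated for the example (unsigned, with made-up claims) so that the snippet runs without a cluster, and jwt_payload is a hypothetical helper name.

```shell
# jwt_payload TOKEN: print the decoded JSON payload of a JWT.
jwt_payload() {
  # Take the second segment and map base64url characters to standard base64.
  segment="$(printf '%s' "$1" | cut -d '.' -f 2 | tr '_-' '/+')"
  # Restore the '=' padding that JWTs strip.
  while [ $(( ${#segment} % 4 )) -ne 0 ]; do segment="${segment}="; done
  printf '%s' "$segment" | base64 -d
}

# Stand-in token for the demo; a real one comes from: kubectl create token <sa_name>
claims='{"aud":["https://kubernetes.default.svc"],"exp":1700000000,"sub":"system:serviceaccount:default:monitor-sa"}'
payload="$(printf '%s' "$claims" | base64 | tr -d '=\n' | tr '/+' '_-')"
sample_token="header.${payload}.signature"

jwt_payload "$sample_token"   # prints the claims JSON, including aud and exp
```

Decoding only reveals the claims; it does not verify the signature, which remains the API server's job.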

- You can limit the SA access using RBAC.

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: pod-reader
    rules:
    - apiGroups: ['']
      resources: ['pods']
      verbs: ['get', 'watch', 'list']
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: read-pods
    subjects:
    - kind: ServiceAccount
      name: dashboard-sa # NO apiGroup should be specified when subject kind is service account
      namespace: default # required for ServiceAccount subjects; "default" is assumed here
    roleRef:
      kind: Role
      name: pod-reader
      apiGroup: rbac.authorization.k8s.io

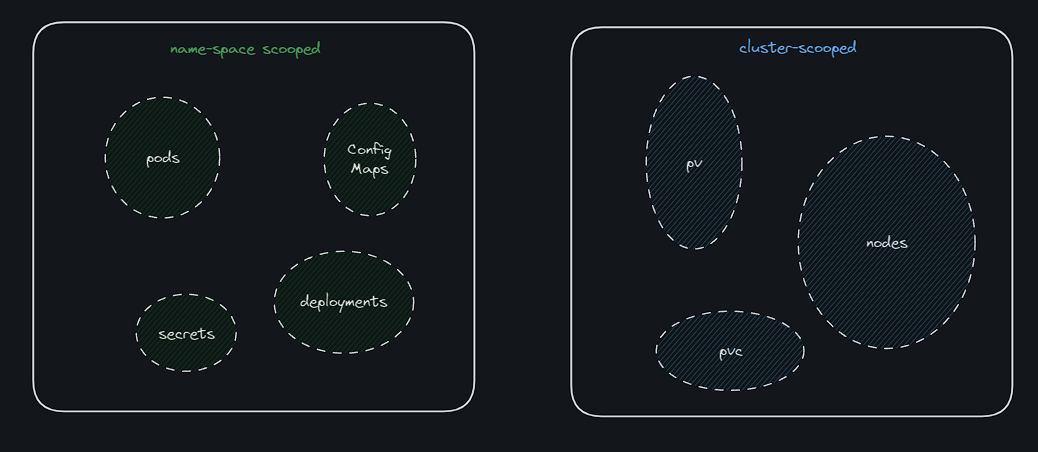

Cluster Roles and Role Bindings

- Cluster Roles are just like Roles, except that they are meant for cluster-scoped resources, e.g. nodes, PersistentVolumes, and namespaces (PersistentVolumeClaims, by contrast, are namespaced).

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: cluster-administrator  # cluster-scoped objects take no namespace
    rules:
    - apiGroups: [""] # "" indicates the core API group
      resources: ["nodes"]
      verbs: ["get", "list", "create", "delete"]

- After creating the ClusterRole, you need to link the user to it. For this, we create an object called ClusterRoleBinding.

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: cluster-administrator
    subjects:
    - kind: User  # the kind is case-sensitive
      name: cluster-admin
      apiGroup: rbac.authorization.k8s.io
    roleRef:
      kind: ClusterRole
      name: cluster-administrator
      apiGroup: rbac.authorization.k8s.io

⚠️ Note that you can also create a ClusterRole for a namespaced resource, but when you authorize a user to access Pods this way, the user gets access to all Pods across the cluster.

Image Security

- Using a private registry; you need to create a secret of type docker-registry and provide your docker server, username, password, and email.

    kubectl create secret docker-registry regcred \
      --docker-server=private-registry.io \
      --docker-username=chxmxii \
      --docker-password=chxmxii01230 \
      --docker-email=contact@chx.global.temp.domains

- You can reference the secret using imagePullSecrets when pulling an image from a private registry;

    apiVersion: v1
    kind: Pod
    metadata:
      name: myapp
    spec:
      containers:
      - name: myapp-container  # container names must be lowercase
        image: private-registry.io/apps/my-app
      imagePullSecrets:
      - name: regcred
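For reference, kubectl create secret docker-registry stores these values as a JSON document under the .dockerconfigjson key of a secret of type kubernetes.io/dockerconfigjson. The sketch below rebuilds that document by hand to show what ends up inside the secret. The password is a placeholder, and docker_config_json is a name invented for this example.

```shell
# docker_config_json SERVER USERNAME PASSWORD EMAIL
# Values must not contain double quotes or backslashes (no JSON escaping is done).
docker_config_json() {
  # The "auth" field is base64("username:password").
  auth="$(printf '%s:%s' "$2" "$3" | base64 | tr -d '\n')"
  printf '{"auths":{"%s":{"username":"%s","password":"%s","email":"%s","auth":"%s"}}}\n' \
    "$1" "$2" "$3" "$4" "$auth"
}

docker_config_json private-registry.io chxmxii '<password>' contact@chx.global.temp.domains
```

Since base64 is an encoding rather than encryption, anyone who can read the secret can recover the registry password, which is one more reason to limit access to secrets with RBAC.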

Security Contexts

- A security context defines privilege and access control settings for a Pod or container. You may choose to configure the security settings at the container level or at the Pod level.

- If you configure it at the Pod level, the settings apply to all the containers within the Pod.

- If you configure it at both the Pod and the container level, the settings on the container override the settings on the Pod.

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-web-app
    spec:
      # at the pod level:
      # securityContext:
      #   runAsUser: 1005
      containers:
      - name: nginx
        image: nginx
        command: ["sleep", "1000"]
        securityContext:  # at the container level
          runAsUser: 1005
          capabilities:   # capabilities are only supported at the container level
            add: ["SYS_ADMIN"]

Network Policies

- By default, all pods within the cluster can communicate with each other, which means no rules stop the pods from reaching each other.

- Network policies help control the flow of ingress and egress traffic to and from a group of pods, using labels and selectors.

- Network policies are stateful, meaning that if a request is allowed, its response is allowed automatically too. Note that the effective policy for a pod is the union of all the network policies that select it, so the order in which the rules are evaluated does not matter.

Network Policy for the database pod;

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: db-policy
    spec:
      podSelector:
        matchLabels:
          role: db
      policyTypes:
      - Ingress # this policy covers ingress traffic
      - Egress  # this policy covers egress traffic
      ingress:
      - from:
        - podSelector:
            matchLabels:
              role: api
          # same list item as the podSelector above, so both must match:
          # only api pods in the namespace labeled name=prod can reach the DB pod
          namespaceSelector:
            matchLabels:
              name: prod
        # allow ingress traffic from a specific ip addr
        - ipBlock:
            cidr: 192.168.5.10/32
        ports:
        - protocol: TCP
          port: 3306
      egress:
      - to:
        - ipBlock:
            cidr: 192.168.5.10/32
        ports:
        - protocol: TCP
          port: 3306

    # LAB
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: internal-policy
    spec:
      podSelector:
        matchLabels:
          name: internal
      policyTypes:
      - Ingress
      - Egress
      egress:
      - to:
        - podSelector:
            matchLabels:
              name: payroll
        - podSelector:
            matchLabels:
              name: mysql
        # - podSelector:
        #     matchExpressions:
        #     - key: name
        #       operator: In
        #       values: ["payroll", "mysql"]
        ports:
        - protocol: TCP
          port: 8080
        - protocol: TCP
          port: 3306
      ingress:
      - {}
    # ref: https://stackoverflow.com/questions/62248909/how-does-matchexpressions-work-in-networkpolicy

LAB;

RBAC User Permissions;

There is an existing Namespace applications.

- User smoke should be allowed to create and delete Pods, Deployments and StatefulSets in Namespace applications.

- User smoke should have view permissions (like the permissions of the default ClusterRole named view) in all Namespaces but not in kube-system.

- Verify everything using kubectl auth can-i.

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      namespace: applications
      name: smoke-rbac
    rules:
    - apiGroups: [""]
      resources: ["pods"]
      verbs: ["create", "delete"]
    - apiGroups: ["apps"]
      resources: ["statefulsets", "deployments"]
      verbs: ["create", "delete"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      namespace: applications
      name: smoke-rb
    subjects:
    - kind: User
      name: smoke
      apiGroup: rbac.authorization.k8s.io
    roleRef:
      kind: Role
      name: smoke-rbac
      apiGroup: rbac.authorization.k8s.io
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: smoke-crbac
      namespace: {{nss}}
    subjects:
    - kind: User
      name: smoke
      apiGroup: rbac.authorization.k8s.io
    roleRef:
      kind: ClusterRole
      name: view
      apiGroup: rbac.authorization.k8s.io

    # render and apply the file once per namespace, skipping the excluded ones
    for i in $(k get ns --no-headers | cut -d' ' -f1 | grep -v -E "kube-system|local-path-storage"); do
      sed "s/{{nss}}/$i/g" solution.yml | kubectl apply -f -
    done

Using the imperative way;

    k -n applications create role smoke --verb create,delete --resource pods,deployments,sts
    k -n applications create rolebinding smoke --role smoke --user smoke

    k get ns # get all namespaces
    k -n applications create rolebinding smoke-view --clusterrole view --user smoke
    k -n default create rolebinding smoke-view --clusterrole view --user smoke
    k -n kube-node-lease create rolebinding smoke-view --clusterrole view --user smoke
    k -n kube-public create rolebinding smoke-view --clusterrole view --user smoke

NOTES;

A ClusterRole|Role defines a set of permissions and where it is available, in the whole cluster or just a single Namespace.

A ClusterRoleBinding|RoleBinding connects a set of permissions with an account and defines where it is applied, in the whole cluster or just a single Namespace.

Because of this there are 4 different RBAC combinations and 3 valid ones:

- Role + RoleBinding (available in single Namespace, applied in single Namespace)

- ClusterRole + ClusterRoleBinding (available cluster-wide, applied cluster-wide)

- ClusterRole + RoleBinding (available cluster-wide, applied in single Namespace)

- Role + ClusterRoleBinding (NOT POSSIBLE: available in single Namespace, applied cluster-wide)
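The ClusterRole + RoleBinding combination is what the lab solution relies on: one binding to the view ClusterRole per namespace, skipping kube-system. The sketch below previews that rendering offline, using a hard-coded namespace list and a trimmed template as stand-ins. On a real cluster the list would come from k get ns --no-headers | cut -d' ' -f1 and the output would be piped to kubectl apply -f -. The render_bindings helper is a name chosen for this example.

```shell
template="$(mktemp)"
cat > "$template" <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: smoke-view
  namespace: {{nss}}
subjects:
- kind: User
  name: smoke
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: view
  apiGroup: rbac.authorization.k8s.io
---
EOF

# Hard-coded stand-in for the namespaces of a real cluster.
namespaces="applications default kube-node-lease kube-public kube-system"

render_bindings() {
  for ns in $namespaces; do
    # The lab excludes kube-system from the view permissions.
    if [ "$ns" = "kube-system" ]; then continue; fi
    sed "s/{{nss}}/$ns/g" "$template"
  done
}

render_bindings
```

Because the permissions live in a single ClusterRole, only the small RoleBinding has to be repeated per namespace.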

NetworkPolicy;

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: np
      namespace: space1
    spec:
      podSelector: {}
      policyTypes:
      - Egress
      egress:
      - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: space2
      - ports:
        - port: 53
          protocol: TCP
        - port: 53
          protocol: UDP
    ---
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: np
      namespace: space2
    spec:
      podSelector: {}
      policyTypes:
      - Ingress
      ingress:
      - from:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: space1